Nothing seemed off about Rep. Zack Stephenson's testimony to a House committee on why the Minnesota Legislature should crack down on the use of so-called deep fake technology.

Using artificial intelligence, the technology can manipulate audio and video to create lifelike recreations of people saying or doing things that never actually happened, he said. Deep fakes have been used to create sexually explicit videos of people or fake political content intended to influence elections.

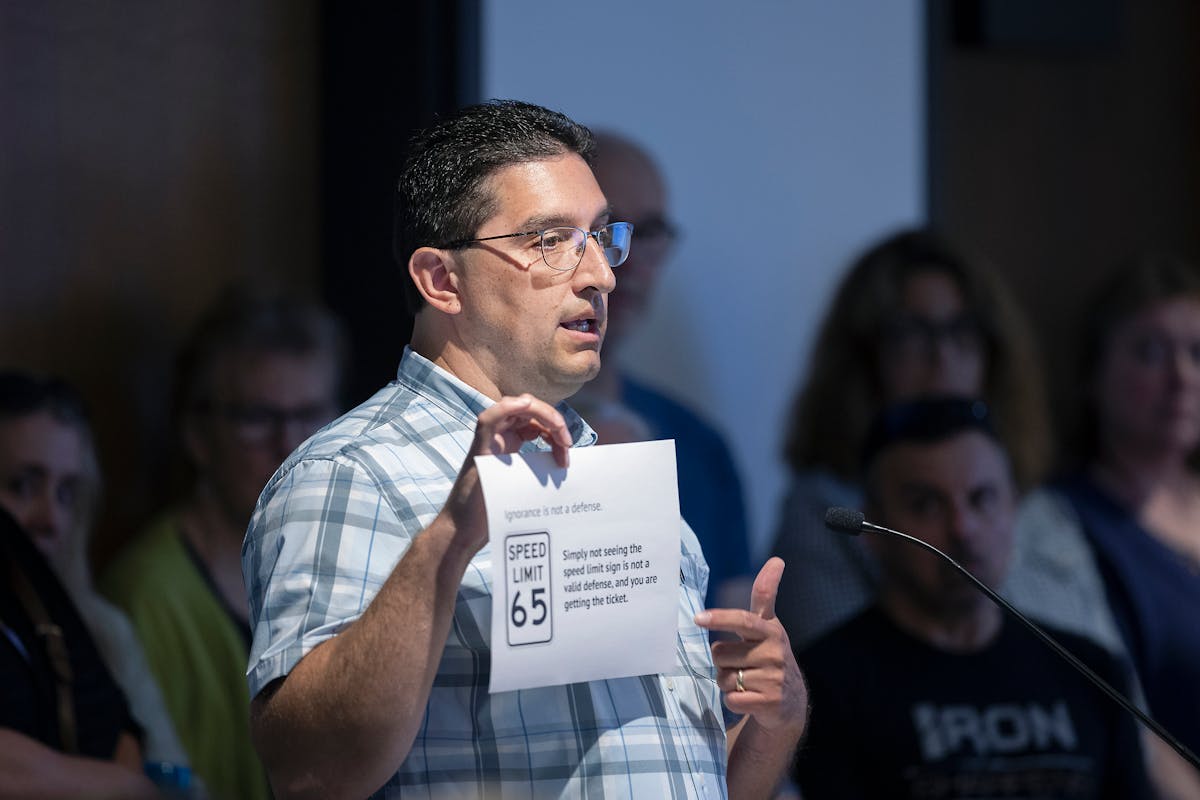

Then, the Coon Rapids Democrat paused for a reveal. His comments up to that point were written by artificial intelligence software ChatGPT with a one-sentence prompt. He wanted to demonstrate "the advanced nature of artificial technology."

It worked.

"Thank you for that unsettling testimony," responded Rep. Mike Freiberg, DFL-Golden Valley, chair of the Minnesota House elections committee.

The proposal represents a first attempt from Minnesota lawmakers to clamp down on the spread of disinformation through the technology, particularly when it comes to influencing elections or in situations where it's used to distribute fake sexual images of someone without the person's consent.

It's already a crime in Minnesota to publish, sell or disseminate private explicit images and videos without the person's permission. But that revenge porn law was written before much was known about deep fake technology, which has already been used in Minnesota to disseminate realistic, but not real, sexual images of people.

Stephenson's bill would make it a gross misdemeanor to knowingly disseminate, without permission, sexually explicit content made with deep fake technology that clearly identifies a person.

The proposal is modeled after what California and Texas have already done to try to tamp down on the use of the technology in these situations, as well as when deep fakes are used to influence an election. His bill would create penalties for someone who uses the technology within 60 days before an election to try to hurt a candidate's credibility or otherwise influence voters.

Deep fake videos have yet to become a major problem in state or national elections, but national groups have been sounding the alarm that the technology has developed rapidly in recent years.

Republicans on the committee backed the idea, concerned about the possibility for the technology to create fake information that can spread rapidly on social media.

"I'd seen a video, artificial intelligence, where they literally listened to someone's voice for a short time, 20 or 30 seconds, and then they were able to duplicate that voice," said Rep. Pam Altendorf, R-Red Wing. "At the same time it was completely fascinating to me, it was also completely horrifying to think of what people could do with that."

The House Elections Finance and Policy Committee advanced the proposal on a voice vote last week.

"Hopefully we can get this solved before Skynet becomes self-aware," Freiberg said after the vote.
